Learning with Advice

Overview

Knowledge-based Probabilistic Logic Learning (KBPLL) learns a model using training data and expert advice, extending Relational Functional Gradient Boosting (RFGB).

Knowledge and advice are interchangeable in this context, and both are specified by label preferences. A label preference names one set of labels that is preferred (and therefore more likely) over another set. A conjunction of literals in logic specifies the set of examples to which the label preference applies.

For example:

- For the clause:

Patient(x) && BloodPressure(x, HIGH) && Cholesterol(x, HIGH)

- The preferred label is:

{Heart Attack}

- The non-preferred label is:

{Stroke, Cancer}

This advice says that patients with high blood pressure and high cholesterol are more likely to have a heart attack and less likely to have cancer or a stroke.

The key notion behind RFGB is to calculate the current error (gradient) in the model for every example and fit a tree to that error. By adding that tree to the current model, the algorithm is pushed toward correcting its error. In order to incorporate expert knowledge, we introduce an advice gradient that pushes preferred labels toward 1 and non-preferred labels toward 0.
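The two gradients described above can be sketched for a single example and a single label. This is a minimal illustration, not the tool's implementation: the function names `data_gradient` and `advice_gradient` are hypothetical, and the +1/-1/0 encoding of the advice direction is an assumption made for clarity.

```python
def data_gradient(label: int, prob: float) -> float:
    """Current error on one example: observed label (0 or 1) minus predicted probability."""
    return label - prob


def advice_gradient(is_preferred: bool, is_non_preferred: bool) -> float:
    """Direction suggested by advice for one label (assumed encoding).

    +1.0 pushes the label's probability toward 1 (preferred),
    -1.0 pushes it toward 0 (non-preferred),
     0.0 means no advice covers this example/label.
    """
    return float(is_preferred) - float(is_non_preferred)


# A positive example the model currently under-predicts has a positive error.
error = data_gradient(label=1, prob=0.3)
# Advice that prefers this label also pushes upward.
push = advice_gradient(is_preferred=True, is_non_preferred=False)
```

A tree fitted to these values moves the model in the direction of the sign: positive values raise the predicted probability, negative values lower it.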

Advice

Advice preferences are specified in a single file, and each piece of advice is specified in three lines.

- The first line contains the preferred label(s), separated by commas.

- The second line contains the non-preferred label(s), separated by commas.

- The final line contains the path to the file that specifies advice clauses. These clauses define the examples where the advice should apply.

The advice file can contain multiple pieces of advice, each read in three-line chunks.

Example:

heartattack

stroke,cancer

C:\path\to\advice\file.txt

In the binary case, heartattack and !heartattack can be used as the preferred/non-preferred labels.
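To make the three-line layout concrete, here is a small sketch of a reader for such a file. It is not the parser used by the software: the `Advice` class and `parse_advice` function are hypothetical names, and skipping blank lines and splitting labels on commas are assumptions based on the example above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Advice:
    preferred: List[str]      # labels from the first line
    non_preferred: List[str]  # labels from the second line
    clause_file: str          # path from the third line


def parse_advice(text: str) -> List[Advice]:
    """Read advice in three-line chunks (assumption: blank lines are ignored)."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if len(lines) % 3 != 0:
        raise ValueError("each piece of advice needs exactly three lines")
    pieces = []
    for i in range(0, len(lines), 3):
        pref, non_pref, path = lines[i:i + 3]
        pieces.append(Advice(
            preferred=[s.strip() for s in pref.split(",")],
            non_preferred=[s.strip() for s in non_pref.split(",")],
            clause_file=path,
        ))
    return pieces


example = "heartattack\nstroke,cancer\nC:\\path\\to\\advice\\file.txt\n"
parsed = parse_advice(example)
```

Running a check like this before training can catch a missing line early, since a file whose line count is not a multiple of three cannot be split into complete pieces of advice.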

The file defining the clauses must use the following format:

4.0 target(Arg1,...,ArgN) :- predicate1(...) ^ predicate2(...) ^ ... predicateN(...).

-4.0 target(Arg1,...,ArgN) :- !.

The file must define a clause for every possible example.

- Clauses led by a positive value (use 4.0) define examples where the advice will apply.

- Clauses led by a negative value (use -4.0) define examples where the advice will not apply.

- The cut (!) is used in the last clause to define all possible examples not covered by the previous clause.

Advice is compiled into a single value for every example. This is written to the “gradients_target.adv_grad” file in the training directory. It is useful to check this file to ensure that there are non-zero values.

KBPLL Parameters

Two key parameters: -adviceFile and -adviceWt

- -adviceFile should be set to the path of the file that contains the advice. Specifying a value for this parameter should ensure that KBPLL runs correctly.

- -adviceWt controls the trade-off between the gradient with respect to the training data and the gradient with respect to the advice. The default value is 0.5, so this parameter only needs to be specified if you want to change it.
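The trade-off controlled by -adviceWt can be pictured as a weighted sum of the two gradients. The sketch below is an assumption about the form of that combination, not a statement of how the software computes it: `combined_gradient` is a hypothetical name, and the choice that the weight multiplies the advice term (with the data term getting the remainder) is a guess. At the default of 0.5 both terms count equally under either reading.

```python
def combined_gradient(data_grad: float, advice_grad: float, advice_wt: float = 0.5) -> float:
    """Blend the data gradient and the advice gradient (assumed linear trade-off)."""
    if not 0.0 <= advice_wt <= 1.0:
        raise ValueError("advice_wt is assumed to lie in [0, 1]")
    return (1.0 - advice_wt) * data_grad + advice_wt * advice_grad


# A negative example predicted at 0.8 has data gradient 0 - 0.8 = -0.8,
# while advice that prefers the label contributes +1.0.
balanced = combined_gradient(-0.8, 1.0)                     # equal weighting
data_only = combined_gradient(-0.8, 1.0, advice_wt=0.0)     # advice ignored
advice_only = combined_gradient(-0.8, 1.0, advice_wt=1.0)   # data ignored
```

In this example the balanced gradient is slightly positive, so the advice narrowly outweighs the training label; moving the weight toward 0 lets the data dominate instead.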

Download

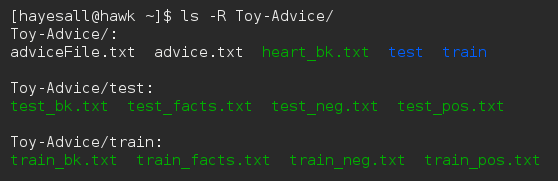

Here is a basic dataset to help with understanding advice and its associated file setup:

Download: Toy-Advice.zip (27.5 KB)

md5sum: 7ad05a6cfa3b9cde55a96669cb94ed15

sha256sum: df60f5399a41ff8ef38f136366a55e4886d0af8705bfce55b761710a1f3848c7

advice.txt:

ha

!ha

adviceFile.txt

adviceFile.txt:

-4.0 ha(A) :- chol(A, 4).

-4.0 ha(A) :- chol(A, 3).

4.0 ha(A) :- !.
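One way to read the clause file above is as an ordered list in which the first matching clause decides whether the advice applies, with the final cut clause catching everything else. The sketch below encodes that reading for the toy clauses. It is an interpretation, not the tool's evaluator: `TOY_CLAUSES` and `advice_applies` are hypothetical names, first-match semantics is an assumption, and the second argument of chol is treated as an integer level.

```python
from typing import Callable, List, Tuple

# Each clause is (weight, test on the cholesterol level of the example).
Clause = Tuple[float, Callable[[int], bool]]

TOY_CLAUSES: List[Clause] = [
    (-4.0, lambda chol: chol == 4),  # -4.0 ha(A) :- chol(A, 4).
    (-4.0, lambda chol: chol == 3),  # -4.0 ha(A) :- chol(A, 3).
    (4.0, lambda chol: True),        #  4.0 ha(A) :- !.  (covers all remaining examples)
]


def advice_applies(chol: int, clauses: List[Clause] = TOY_CLAUSES) -> bool:
    """Return True if the first matching clause has a positive weight."""
    for weight, matches in clauses:
        if matches(chol):
            return weight > 0
    raise ValueError("the clause file must cover every possible example")


covered = [level for level in range(1, 5) if advice_applies(level)]
```

Under this reading, the toy advice (prefer ha over !ha) applies to every example except those with cholesterol level 3 or 4.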

Train with advice using:

java -jar v1-0.jar -l -train train/ -target ha -adviceFile "advice.txt" -trees 3

Test with:

java -jar v1-0.jar -i -model train/models/ -test test/ -target ha -trees 3

References

- Odom, P.; Khot, T.; Porter, R.; and Natarajan, S. 2015. Knowledge-based Probabilistic Logic Learning. In AAAI.

- Odom, P.; Bangera, V.; Khot, T.; Page, D.; and Natarajan, S. 2015. Extracting Adverse Drug Events from Text using Human Advice. In AIME.